Tech News

IBM Sets the Course to Build World’s First Large-Scale, Fault-Tolerant Quantum Computer at New IBM Quantum Data Center

IBM unveiled its path to build the world’s first large-scale, fault-tolerant quantum computer, setting the stage for practical and scalable quantum computing.

Scheduled for delivery by 2029, IBM Quantum Starling will be built in a new IBM Quantum Data Center in Poughkeepsie, New York, and is expected to perform 20,000 times more operations than today’s quantum computers. Representing the computational state of IBM Starling would require the memory of more than a quindecillion (10^48) of the world’s most powerful supercomputers. With Starling, users will be able to fully explore the complexity of its quantum states, which lie beyond the limited properties that current quantum computers can access.
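To give a feel for why representing such a state classically is so costly, here is a minimal Python sketch of how memory grows when every amplitude of an n-qubit state is stored explicitly. It assumes a dense state vector with one 16-byte complex number per amplitude, and it uses the 200-logical-qubit figure quoted for Starling later in this article. The function name and constant are illustrative, and the sketch does not attempt to reproduce IBM’s supercomputer comparison.

```python
# Assumption: each amplitude is stored as one complex128 value (16 bytes).
BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(num_qubits: int) -> int:
    """Bytes needed to store all 2**n amplitudes of an n-qubit state vector."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return BYTES_PER_AMPLITUDE * 2**num_qubits


if __name__ == "__main__":
    # Each added qubit doubles the memory requirement.
    for n in (10, 50, 100, 200):
        print(f"{n:>3} qubits: {state_vector_bytes(n):.3e} bytes")
```

The doubling per qubit is the point of the sketch: the requirement is exponential in the number of qubits, so no realistic amount of classical memory keeps pace.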

IBM, which already operates a large, global fleet of quantum computers, is releasing a new Quantum Roadmap that outlines its plans to build out a practical, fault-tolerant quantum computer.

“IBM is charting the next frontier in quantum computing,” said Arvind Krishna, Chairman and CEO, IBM. “Our expertise across mathematics, physics, and engineering is paving the way for a large-scale, fault-tolerant quantum computer — one that will solve real-world challenges and unlock immense possibilities for business.”

A large-scale, fault-tolerant quantum computer with hundreds or thousands of logical qubits could run hundreds of millions to billions of operations, which could improve time and cost efficiency in fields such as drug development, materials discovery, chemistry, and optimization.

Starling will be able to access the computational power required for these problems by running 100 million quantum operations using 200 logical qubits. It will be the foundation for IBM Quantum Blue Jay, which will be capable of executing 1 billion quantum operations over 2,000 logical qubits.

A logical qubit is a unit of an error-corrected quantum computer tasked with storing one qubit’s worth of quantum information. It is made from multiple physical qubits working together to store this information and monitor each other for errors.

Like classical computers, quantum computers need to be error corrected to run large workloads without faults. To do so, clusters of physical qubits are used to create a smaller number of logical qubits with lower error rates than the underlying physical qubits. Logical qubit error rates are suppressed exponentially with the size of the cluster, enabling them to run greater numbers of operations.
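The exponential suppression described above can be illustrated with a classical toy model. The sketch below is not IBM’s qLDPC code. It assumes a simple repetition code in which one bit is copied n times, each copy flips independently with probability p, and the value is recovered by majority vote. The function name and the 1 percent error rate are illustrative assumptions.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n noisy copies gives the wrong bit.

    Assumes each copy flips independently with probability p and n is odd.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so the majority vote cannot tie")
    # The vote fails when more than half of the copies have flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    physical_error = 0.01  # assumed per-copy error rate
    for n in (1, 3, 5, 7, 9):
        print(f"cluster size {n}: {logical_error_rate(physical_error, n):.3e}")
```

In this toy model each increase in cluster size multiplies the logical error rate by a roughly constant small factor, which is the behavior the paragraph describes. Real quantum codes must also handle phase errors and faulty measurements, which this sketch ignores.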

Creating increasing numbers of logical qubits capable of executing quantum circuits, with as few physical qubits as possible, is critical to quantum computing at scale. Until today, a clear path to building such a fault-tolerant system without unrealistic engineering overhead had not been published.

The Path to Large-Scale Fault Tolerance

The success of executing an efficient fault-tolerant architecture is dependent on the choice of its error-correcting code, and how the system is designed and built to enable this code to scale.

Alternative and previous gold-standard error-correcting codes present fundamental engineering challenges. To scale, they would require an infeasible number of physical qubits to create enough logical qubits to perform complex operations, necessitating impractical amounts of infrastructure and control electronics. This makes them unlikely to be implemented beyond small-scale experiments and devices.

A practical, large-scale, fault-tolerant quantum computer requires an architecture that is:

- Fault-tolerant to suppress enough errors for useful algorithms to succeed.

- Able to prepare and measure logical qubits through computation.

- Capable of applying universal instructions to these logical qubits.

- Able to decode measurements from logical qubits in real time and alter subsequent instructions.

- Modular to scale to hundreds or thousands of logical qubits to run more complex algorithms.

- Efficient enough to execute meaningful algorithms with realistic physical resources, such as energy and infrastructure.

Today, IBM is introducing two new technical papers that detail how it will meet the above criteria to build a large-scale, fault-tolerant architecture.

The first paper unveils how such a system will process instructions and run operations effectively with quantum low-density parity check (qLDPC) codes. This work builds on a groundbreaking approach to error correction, featured on the cover of Nature, that introduced qLDPC codes. This code drastically reduces the number of physical qubits needed for error correction and cuts required overhead by approximately 90 percent, compared to other leading codes. Additionally, the paper lays out the resources required to reliably run large-scale quantum programs to prove the efficiency of such an architecture over others.

The second paper describes how to efficiently decode the information from the physical qubits and charts a path to identify and correct errors in real time with conventional computing resources.

From Roadmap to Reality

The new IBM Quantum Roadmap outlines the key technology milestones that will demonstrate and execute the criteria for fault tolerance. Each new processor in the roadmap addresses specific challenges to build quantum systems that are modular, scalable, and error-corrected:

- IBM Quantum Loon, expected in 2025, is designed to test architecture components for the qLDPC code, including “C-couplers” that connect qubits over longer distances within the same chip.

- IBM Quantum Kookaburra, expected in 2026, will be IBM’s first modular processor designed to store and process encoded information. It will combine quantum memory with logic operations — the basic building block for scaling fault-tolerant systems beyond a single chip.

- IBM Quantum Cockatoo, expected in 2027, will entangle two Kookaburra modules using “L-couplers.” This architecture will link quantum chips together like nodes in a larger system, avoiding the need to build impractically large chips.

Together, these advancements are being designed to culminate in Starling in 2029.

POLYNOME AI ACADEMY AND ABU DHABI SCHOOL OF MANAGEMENT EXPAND CAIO PROGRAM, TAP GLOBAL TECH LEADERS

Polynome AI Academy and ADSM have unveiled the expanded global list of instructors for the second cohort of their Executive Program for Chief AI Officer (CAIO), featuring leaders from NVIDIA, Mubadala, BCG, G42, AI71, and leading research institutions.

The intensive program, running April 10–21 in Abu Dhabi, was created in response to a growing need among governments and large enterprises for structured AI leadership. It aims to equip Chief AI Officers and senior executives with the governance frameworks, operating models, and decision-making structures required to lead AI at both organizational and national scale.

“The first cohort confirmed what we’ve long believed: the CAIO role requires a dedicated program built for the realities of leading AI at scale,” said Alexander Khanin, Founder of Polynome Group. “Executives came to Abu Dhabi and left with actionable strategies they are already putting into practice. The tools are ready, and by 2027, AI is expected to guide half of all business decisions. The focus now is on equipping organizations with the framework to confidently execute AI-driven decisions. Cohort 2 builds on this momentum with a refined curriculum and fresh global perspectives.”

“The first cohort demonstrated the demand we anticipated; top executives across the region recognize that AI strategy cannot simply be delegated,” commented Dr. Tayeb Kamali, Chairman of Abu Dhabi School of Management. “The program continues to evolve, providing an immersive experience that equips leaders with the skills and insights to navigate AI adoption successfully and translate technological potential into real business impact.”

Inaugural Cohort: Impact & Insights

The first Executive Program for Chief AI Officer, held in November 2025 at Abu Dhabi School of Management, enrolled 35 C-suite executives and senior technology leaders. Participants completed 10 modules covering AI strategy, sovereign AI infrastructure, governance frameworks, agentic systems, Arabic NLP, AI investment strategy, and enterprise deployment methodology — combined with site visits to the UAE Cybersecurity Council, Core42’s Khazna Data Centers, and ADNOC, as well as executive roundtables with policymakers.

“The Executive Chief AI Program is unlike any course I’ve attended,” said Dr. Noura AlDhaheri, Chairman, DNA Investments. “It brings us directly to the AI creators, experts, and leaders, giving insight into the real challenges and the evolving landscape of AI. One of the most important lessons is that this field is constantly changing, so we must continually reinforce our knowledge and update our teams. AI is set to transform the way we do business; it’s a truly historic moment, and staying ahead is essential.”

The Global AI Experts Driving Cohort 02

The confirmed instructor list for Cohort 02 brings together leading voices from across the global AI ecosystem, spanning sovereign investment, national-scale AI architecture, enterprise strategy, and frontier research. Among the confirmed instructors are Dr. George Tilesch, Founder & President of PHI Institute for Augmented Intelligence; Dr. Andrew Jackson, Group Chief AI Officer at G42; Prof. Merouane Debbah, Professor & 6G Lab Director at Khalifa University; Prof. Nizar Habash, Professor at New York University Abu Dhabi; Dr. John Ashley, Chief Architect at AI Nations and Director of NVIDIA AI Technology Centers; Charbel Aoun, Smart City & Spaces Director – EMEA at NVIDIA; Jean-Christophe Bernardini, Partner & Managing Director at Boston Consulting Group (BCG); Faris Al Mazrui, Head of Technology at Mubadala Investment Company; Chiara Marcati, Chief AI Advisory and Business Officer at AI71; Jorge Colotto, Founder and CEO of AIdeology.ai; and Marco Tempest, Director of Innovation Hub at ETH Zürich. Additional instructors will be announced in the coming weeks.

Program Structure

The Executive Program for Chief AI Officer is a 10-day intensive comprising 10 modules, executive seminars, case labs, operating model workshops, site visits to UAE AI institutions (including Core42’s Khazna data center), policymaker roundtables, and lifetime access to the CAIO alumni network. The program is designed for CAIOs, CTOs, CIOs, CISOs, public sector advisors, and senior digital transformation executives.

NEMETSCHEK AND PRINCE SULTAN UNIVERSITY PARTNER TO EMPOWER THE NEXT GENERATION OF DIGITAL AEC TALENT IN SAUDI ARABIA

Strategic partnership aligns with the goals of Saudi Vision 2030 to advance skills development, innovation and entrepreneurship across the AEC and Media & Entertainment sectors

Nemetschek Arabia, part of the Nemetschek Group, one of the world’s leading software providers for the Architecture, Engineering, Construction and Operations (AEC/O) industry, has entered into a strategic partnership with Prince Sultan University (PSU), one of Saudi Arabia’s leading private higher education institutions, to support the development of future-ready talent and accelerate innovation across the Kingdom.

The partnership reflects a shared commitment to advancing education, technology innovation, entrepreneurship and workforce development across the Architecture, Engineering and Construction, as well as Media and Entertainment, sectors. Through this collaboration, Nemetschek Group and PSU will explore joint initiatives designed to strengthen skills development, expand academic-industry engagement and prepare students for the evolving demands of a digitally enabled economy.

Both parties will work together to implement programming that supports skills development, entrepreneurship and technology adoption, including the potential establishment of a joint accelerator program. The collaboration will also focus on creating opportunities for workshops, capacity-building initiatives, applied learning programs and joint outreach activities, while increasing awareness of each institution’s academic offerings and international programs.

A key pillar of the partnership is the integration of Nemetschek Group’s Global Academic Program, which empowers the next generation of AEC/O leaders by providing students with access to the same cutting-edge digital tools used by industry professionals. The program is designed to bridge the gap between the classroom and the field, fostering entrepreneurial thinking, scientific rigor and a strong sense of societal responsibility. It ensures that graduates entering the workforce are equipped to make an immediate and meaningful impact.

Yves Padrines, Chief Executive Officer of the Nemetschek Group, noted that empowering the next generation of talent is central to Nemetschek’s long-term vision and to the future of the built environment. “Saudi Arabia’s Vision 2030 places people, knowledge and innovation at the heart of national transformation, and this partnership with Prince Sultan University reflects our commitment to contributing to that ambition. By working closely with leading academic institutions, we are helping to develop the digital skills, entrepreneurial mindset and technical excellence required to shape a more sustainable and resilient future.”

Muayad Simbawa, Managing Director of Nemetschek Arabia, added: “This partnership represents an important step in strengthening the connection between academia and industry in the Kingdom. By bringing Nemetschek’s global expertise and academic programs to Prince Sultan University, we are supporting students with practical, industry-relevant skills while nurturing innovation and leadership. It is through partnerships like this that we can help build a highly skilled, future-ready workforce aligned with Saudi Arabia’s Vision 2030.”

Speaking on the impact of the partnership on students and their future careers, Dr. Abdulhakim Almajid, Dean of the College of Engineering at Prince Sultan University, explained: “Prince Sultan University is dedicated to providing our students with a world-class education that meets the highest international standards. Partnering with the Nemetschek Group allows us to further enhance our curriculum with industry-leading technology, as well as expose our students to real-world industry practices. This collaboration will provide our students with a competitive edge, fostering innovation and preparing them to contribute significantly to the Kingdom’s flourishing engineering and media sectors.”

The partnership underscores Nemetschek Group’s continued investment in talent development across the Middle East and its commitment to supporting national priorities through education, innovation and long-term ecosystem building.

PNY STRENGTHENS ITS TEAMS IN THE MIDDLE EAST AND SAUDI ARABIA TO SUPPORT STRATEGIC GROWTH

PNY today announces the reinforcement of its commercial and marketing teams across the Middle East, with a particular focus on Saudi Arabia, a key strategic market for the expansion of its professional and consumer solutions businesses.

These recruitments are designed to support the acceleration of PNY’s expanding business around AI Factory initiatives and large-scale datacenter deployments. By strengthening its capabilities across computing, networking, storage, the full software stack, and the integration of NVIDIA and Vertiv solutions, PNY is positioning itself to deliver scalable, reliable end-to-end infrastructures that meet the evolving needs of enterprises as well as public and governmental organizations investing in AI and high-performance computing.

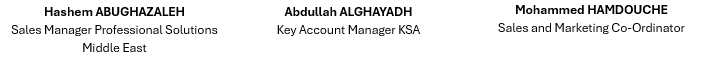

To support this growth strategy, PNY announces several key appointments:

Hashem Abughazaleh joins PNY to lead Professional Solutions Sales.

A seasoned Business Development and Enterprise Sales leader, Hashem brings over 15 years of experience across the Middle East and GCC. He has led high-level partnerships, defined enterprise sales strategies, and managed full solution lifecycles—from partner onboarding and technical customization to delivery.

Abdullah Algahyadh is appointed to drive the expansion of PNY’s presence in Saudi Arabia.

Born and raised in Riyadh, Abdullah holds a Bachelor’s degree in Business Information Systems and a Master’s degree in Entrepreneurship and Innovation Management from Middlesex University. His professional background includes business development, account management, and IT consulting. Abdullah brings strong local market knowledge and will play a key role in strengthening PNY’s footprint in the Kingdom.

Mohammed Hamdouche will lead the development of local marketing activities.

His background sits at the intersection of business, marketing, and technology, shaped by experience in large, tech-driven organizations across MENA markets. He brings expertise in digital marketing, with a strong interest in AI-driven technologies. Mohammed will actively support the growth of consumer solutions (a complete ecosystem of NVIDIA® GeForce® graphics cards, SSD components, and memory modules), storage ranges (USB flash drives and memory cards), as well as professional solutions.

“With these strategic hires, PNY is reinforcing its commitment to the Middle East and Saudi Arabia. We are strengthening our proximity to partners and customers, while helping them embrace innovative technologies to meet the region’s growing needs in digital transformation, AI, and high-performance computing.” – Jérôme Bélan, CEO at PNY Technologies EMEA
